Internet image collections containing photos captured by crowds of photographers show promise for enabling digital exploration of large-scale tourist landmarks. However, prior works focus primarily on geometric reconstruction and visualization, neglecting the key role of language in providing a semantic interface for navigation and fine-grained understanding. In more constrained 3D domains, recent methods have leveraged modern vision-and-language models as a strong prior of 2D visual semantics. While these models display an excellent understanding of broad visual semantics, they struggle with unconstrained photo collections depicting such tourist landmarks, as they lack expert knowledge of the architectural domain and fail to exploit the geometric consistency of images capturing multiple views of such scenes. In this work, we present a localization system that connects neural representations of scenes depicting large-scale landmarks with text describing a semantic region within the scene, by harnessing the power of SOTA vision-and-language models with adaptations for understanding landmark scene semantics. To bolster such models with fine-grained knowledge, we leverage large-scale Internet data containing images of similar landmarks along with weakly-related textual information. Our approach is built upon the premise that images physically grounded in space can provide a powerful supervision signal for localizing new concepts, whose semantics may be unlocked from Internet textual metadata with large language models. We use correspondences between views of scenes to bootstrap spatial understanding of these semantics, providing guidance for 3D-compatible segmentation that ultimately lifts to a volumetric scene representation. To evaluate our method, we present a new benchmark dataset containing large-scale scenes with ground-truth segmentations for multiple semantic concepts. Our results show that HaLo-NeRF can accurately localize a variety of semantic concepts related to architectural landmarks, surpassing the results of other 3D models as well as strong 2D segmentation baselines.

Our goal is to perform text-driven neural 3D localization for landmark scenes captured by collections of Internet photos. In other words, given this collection of images and a text prompt describing a semantic concept in the scene, we would like to know where it is located in 3D space. These images are in the wild, meaning that they may be taken in different seasons, time of day, viewpoints, and distances from the landmark, and may include transient occlusions.

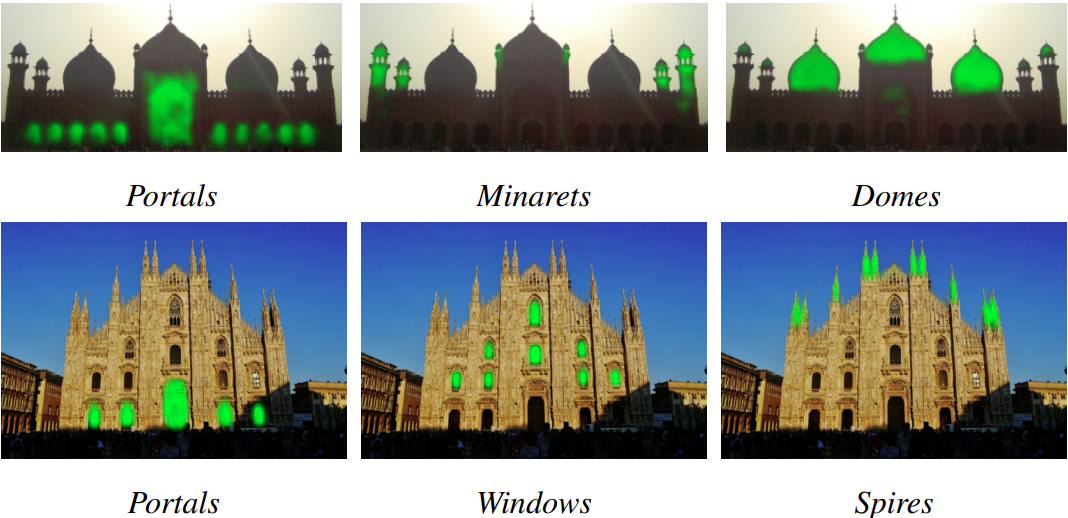

In order to localize unique architectural features landmarks in 3D space, we leverage the power modern foundation models for visual and textual understanding. Despite progress in general multimodal understanding, modern VLMs struggle to localize fine-grained semantic concepts on architectural landmarks, as we show extensively in our results. The architectural domain uses a specialized vocabulary, with terms being rare in general usage.

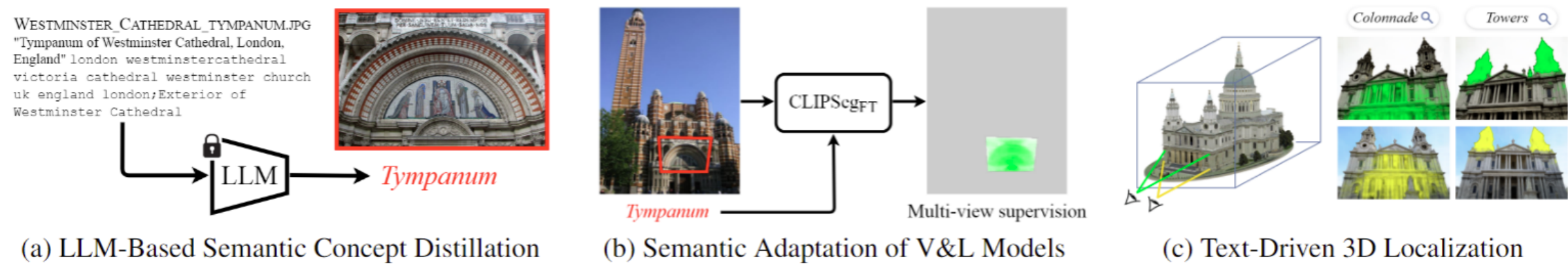

To address these challenges, we design a three-stage system: (a) We extract semantic pseudo-labels from noisy Internet image metadata using a large language model (LLM). (b) We use these pseudo-labels and correspondences between scene views to learn image-level and pixel-level semantics. In particular, we fine-tune an image segmentation model (CLIPSegFT) using multi-view supervision—where zoomed-in views and their associated pseudo-labels (such as image on the left associated with the term “tympanum”) provide a supervision signal for zoomed-out views. (c) We then lift this semantic understanding to learn volumetric probabilities over new, unseen landmarks (such as the St. Paul’s Cathedral depicted on the right), allowing for rendering views of the segmented scene with controlled viewpoints and illumination settings.In addition, we show below visualizations, comparing HaLo-NeRF (left) with the Baseline model (right), which uses the CLIPSeg model without finetuning. Both videos show the same temporal sequence of RGB renderings, varying only in the probabilities depicted (taken either from our model or the baseline). Note that once zoomed-in, we turn off the probabilities for both models, allowing to better view the target semantic region. The target text prompt is written above each video, with the name of the landmark on the right. As illustrated below, our model yields significantly cleaner probabilities that better localize the semantic regions, particularly for unique concepts that are less common outside of the domain of architectural landmarks. We also visualize the zoomed-in region with multiple appearance (for our model, keeping the appearance of the baseline model fixed). Results over additional prompts and landmarks from the HolyScenes benchmark are illustrated in the main paper.

@InProceedings{dudai2024halonerf,

author = {Dudai, Chen and Alper, Morris and Bezalel, Hana and Hanocka, Rana and Lang, Itai and Averbuch-Elor, Hadar},

title = {HaLo-NeRF: Learning Geometry-Guided Semantics for Exploring Unconstrained Photo Collections},

booktitle = {Proceedings of the Eurographics Conference (EG)},

year = {2024}

}